Meta Debuts Matrix to Scale Multi-Agent AI Data

Meta has a data problem. It’s the same problem every major AI lab is facing: the internet is running out of high-quality training text. So Meta is building a system to create its own, and it’s called Matrix.

This isn’t about scraping more forums. It’s about generating novel data by making thousands of AI agents interact with each other in complex simulations. But that process creates a firehose of unstructured information. Matrix is the plumbing, the traffic control, and the library card catalog designed to manage that chaos at a scale of millions of events per second.

The Multi-Agent Mess

Training a single large language model is a compute-intensive task. Training a society of them is a data logistics nightmare. When you spin up thousands of AI agents to debate, collaborate, or play games, they generate an immense volume of interaction data: conversations, decisions, and outcomes. The challenge, detailed in a technical paper released by Meta’s AI division, is that this raw data is messy, uncorrelated, and difficult to feed back into a training pipeline efficiently.

Without a dedicated infrastructure, researchers are left sifting through petabytes of disconnected logs. It’s slow. It’s expensive. You can’t easily ask questions like, “Show me every simulation where an agent successfully negotiated a multi-step task.” The signal gets lost in the noise, which severely limits the utility of these complex, and very expensive, simulations.

A Central Nervous System for Data

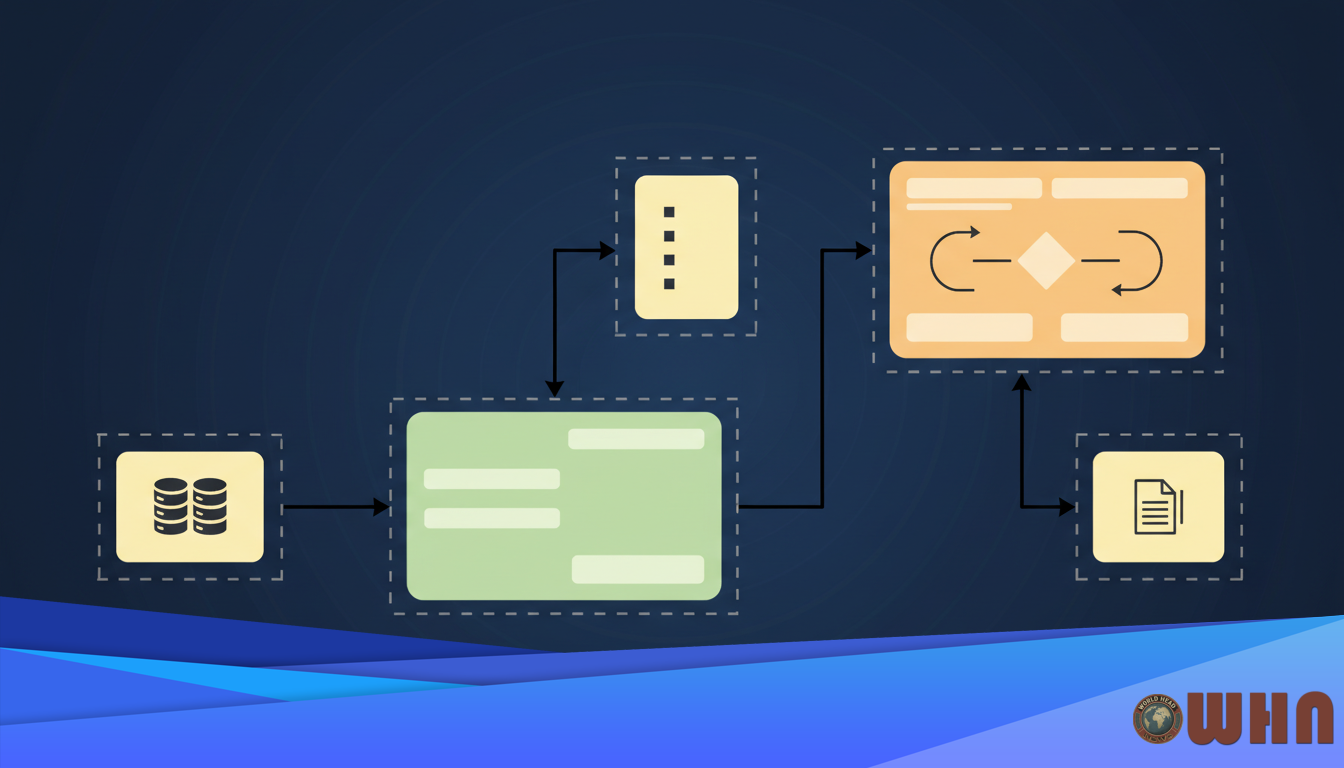

Matrix is engineered to be a high-throughput, low-latency data bus specifically for these multi-agent ecosystems. It’s not a model itself. Think of it as a foundational layer, an operating system for synthetic data generation. According to Meta’s AI blog, the system is built around a centralized scheduler that coordinates interactions and a distributed logging protocol that captures every event with rich metadata.
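To make the scheduler-plus-logging idea concrete, here is a minimal sketch in Python. It is not Meta’s implementation: the class names, the round-robin turn order, and the in-memory list standing in for the distributed logging protocol are all assumptions made for illustration, since the source does not describe the scheduling policy or the log format.

```python
from collections import deque
from itertools import count


class EventLog:
    """Stand-in for the logging layer: an in-memory, append-only list (assumption)."""

    def __init__(self):
        self.records = []

    def append(self, record):
        self.records.append(record)


class Scheduler:
    """Hypothetical central scheduler that gives agents turns in round-robin order."""

    def __init__(self, agents, log):
        # Each agent is an (agent_id, act) pair; act() returns the action it takes.
        self.queue = deque(agents)
        self.log = log
        self.sequence = count()  # global ordering of logged events

    def step(self):
        # Take the next agent, let it act, record the action, then requeue the agent.
        agent_id, act = self.queue.popleft()
        action = act()
        self.log.append(
            {"seq": next(self.sequence), "agent_id": agent_id, "action": action}
        )
        self.queue.append((agent_id, act))

    def run(self, steps):
        for _ in range(steps):
            self.step()


log = EventLog()
scheduler = Scheduler(
    [("agent-a", lambda: "propose"), ("agent-b", lambda: "reply")],
    log,
)
scheduler.run(4)

for record in log.records:
    print(record)
```

The point of routing every turn through one coordinator is that each action passes a single place where it can be recorded, so nothing an agent does escapes the log.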

Here’s how it works. Each action an agent takes, from sending a message to modifying code, is packaged as an “event” and tagged. These tags include the agent’s ID, its current goal, the state of the simulation, and a timestamp accurate to the microsecond. This structured data is then streamed into a central repository. The system, per the documentation, is designed to handle a throughput of over 5 million events per second, so that data capture itself doesn’t become the bottleneck. Meta claims this architecture allows researchers to “query the complete history of an agent society” in near real time, a task the company says was previously out of reach.
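A rough sketch of what a tagged event and a simple history query might look like follows. The field names, the `simulations_reaching` helper, and the sample records are hypothetical and chosen only to mirror the tags described above (agent ID, goal, simulation state, microsecond timestamp); the actual Matrix schema and query interface are not given in the source.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Event:
    """One tagged agent action. All field names are hypothetical."""

    simulation_id: str
    agent_id: str
    goal: str
    action: str
    simulation_state: str
    timestamp: datetime  # Python datetimes carry microsecond resolution


def simulations_reaching(events, goal, state):
    """Return sorted IDs of simulations with an event tagged `goal` in `state`."""
    return sorted(
        {
            e.simulation_id
            for e in events
            if e.goal == goal and e.simulation_state == state
        }
    )


def ts(microsecond):
    # Illustrative timestamps that differ only in the microsecond field.
    return datetime(2025, 1, 1, 12, 0, 0, microsecond, tzinfo=timezone.utc)


# Made-up sample records, for illustration only.
log = [
    Event("sim-1", "agent-7", "negotiate", "send_message", "in_progress", ts(250)),
    Event("sim-1", "agent-7", "negotiate", "accept_offer", "succeeded", ts(900)),
    Event("sim-2", "agent-3", "negotiate", "send_message", "failed", ts(410)),
    Event("sim-3", "agent-9", "write_code", "modify_code", "succeeded", ts(120)),
]

matches = simulations_reaching(log, goal="negotiate", state="succeeded")
print(matches)  # ['sim-1']
```

Because every event carries the same tags, a question like “which simulations ended in a successful negotiation” becomes a filter over structured records rather than a search through free-form logs.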

Feeding the Llama Ecosystem

So why build all this? The goal is to create better AI. Specifically, to feed the next generation of Meta’s Llama models. The web is a powerful source of information, but it’s passive. Multi-agent simulations, in contrast, can be designed to generate data that teaches specific skills like reasoning, persuasion, and long-term planning.

By using Matrix to orchestrate these digital societies, Meta can essentially create bespoke training datasets on demand. If they want to improve Llama’s coding abilities, they can simulate a million AI software engineers working on a project. If the goal is better conversational AI, they can simulate complex social scenarios. It’s a move from finding data to farming it.

“We saw the need to transition from static datasets to a living data engine. Matrix is the circulatory system we built to power it, allowing us to generate and refine training data at a velocity that keeps pace with our model development.”

This approach, however, has its own set of challenges. The risk is creating models trained on sterile, synthetic data that lack the chaotic, unpredictable nuance of human-generated text. The models could develop their own strange logic—an “AI culture”—that doesn’t align with the real world. Meta acknowledges this, stating in its paper that a key area of ongoing research is ensuring “synthetic data diversity and grounding it with curated real-world information.”

Ultimately, Matrix is a massive infrastructure investment. It’s Meta betting that the future of AI won’t be won simply by building bigger models, but by building a better factory to produce the data that feeds them. The system’s success won’t be measured in throughput or latency figures. It will be measured by the capabilities of Llama 4. The initial version of Matrix, according to the project’s roadmap, is slated to be fully integrated with Meta’s primary AI training clusters before the end of the year.